How do you get your firmware builds to your test team?

(Hopefully you have a test team… or at least someone independent who can test your code.)

Do you build locally on your laptop and then email a file? Do they know how to flash it on the device… and have the hardware they need? Do they know how to connect it correctly?

Recently one of my firmware teammates built a (super neat!) browser-based tool. At one point it wasn’t working for me and it needed a fix. So he made the change and I refreshed the browser… and in a matter of seconds the tool was working for me.

I said to him: Wow, that’s a lot easier than deploying firmware, huh?

I didn’t have to download, install, or flash anything. I just refreshed the browser and it was there.

This sort of thing is generally pretty easy (and common!) for web applications, because they’re centrally hosted and can be updated for everyone just by changing the files on the server.

Getting new firmware onto your embedded system is usually more complicated and requires specialized knowledge and hardware.

IDEs often provide this functionality (press F5 to build and load!) but that only works for your local copy of the code with your device connected to your machine.

How do you get this code into devices where other people can use and test it?

The answer is continuous delivery (CD).

CD is the process that automatically delivers our firmware to test systems when we push our code to source control. No manual steps are needed – the code is just there.

Note here that I’m talking about continuous delivery and not deployment. To me, deployment means making the code available to users (like on a website, or to an in-field product). That’s the sort of thing that could be handled by an over-the-air update (if we have one) – but could also be integrated with the CD system!

The plan

If you are using Github for source control, it’s fairly straightforward to add a workflow to deploy your code to real hardware. For the rest of this article, we’ll look at how to set up a very basic example of such a system. It uses just a few components, shown here:

In this example we’ll configure Github Actions (by defining “workflows”) to run when we push code to our repository.

Workflows are defined by adding special files to our repository which include 1) commands to execute and 2) which “runner” they should be executed on. Typically, runners are short-lived (cloud hosted) containers – e.g. an Ubuntu instance – created by Github as needed for your workflows.
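As a minimal sketch of those two parts, a workflow file might look like the following. The file name, workflow name, and job name here are hypothetical and are not taken from the sample repository; the full workflow used in this article appears later.

```yaml
# .github/workflows/hello.yml (hypothetical file name)
name: Hello runner

# Run on every push to the repository.
on: [push]

jobs:
  say-hello:
    # 2) Which runner executes the job: a Github-hosted Ubuntu instance.
    runs-on: ubuntu-latest
    steps:
      # 1) The commands to execute on that runner.
      - name: Print a message
        run: echo "Hello from $(uname -m)"
```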

In this case, first the application will be built in a cloud hosted runner. Then we’ll use a “self-hosted runner” on a Raspberry Pi to do the flashing on the target board (an STM32F303 discovery board here). The self-hosted runner allows us to use Github Actions to define workflows that run on our own hardware.

We’re using a Raspberry Pi in this example only because it’s small, inexpensive, and convenient. We could use any of the platforms supported by the Github runner application (Linux, Windows, or macOS). This application doesn't require any specialized hardware – just a USB port that we’ll use to connect to our programmer/debugger.

In this example, we’ll use an STM32F303 Discovery board as our target. Our actual target doesn’t matter, but this board is fairly common (and I happened to have one here) and it has an integrated ST-Link that we can use with OpenOCD on the Raspberry Pi for flashing.

The application that we’ll use for testing here is a simple LED blinky that has been adapted to run on the STM32F303 Discovery board.

Configure the Raspberry Pi to flash the target

The STM32 Discovery board has a built-in ST-Link programmer that we can use for flashing via USB from the Raspberry Pi. In this case, we’ll use both OpenOCD and GDB – so we’ll need to install them on the Raspberry Pi.

Install GDB with:

    sudo apt install gdb-multiarch

Install OpenOCD with:

    sudo apt install openocd

Note: By default, the ST-Link is assigned to the plugdev group when connected. You need to add your Raspberry Pi username to plugdev to have permission to access the ST-Link:

    sudo usermod -a -G plugdev <your-rpi-username>

There is a GDB script file in the repo (gdb-st-link-flash-and-exit) that can be used to flash the board with an elf file produced by our build process. The script starts OpenOCD (which starts a GDB server) and then connects to it, loads the file, resets the board, and exits. The script looks like this:

    # Start openocd and connect to it directly via pipe
    target extended-remote | openocd -f interface/stlink-dap.cfg \
        -f target/stm32f3x.cfg \
        -c "gdb_port pipe; log_output openocd.log"
    load
    monitor reset
    quit

You use this to flash the target board from the Raspberry Pi with:

    gdb-multiarch -x gdb-st-link-flash-and-exit <elf-file-name>

Create a workflow to build the firmware

Before we can flash the board, first we need to build the application. We want this build to happen automatically every time we push code to the repo. To do this, we’re going to define a workflow in Github Actions.

The workflow will go in the repository in the .github/workflows folder, in a file named build.yml. The .github/workflows folder is a special location where Github looks for workflows to execute. The YAML workflow file can be named whatever we want – and we can have many workflows defined in many different files.

The workflow is a YAML file that must define a few different items for the workflow to run correctly:

The name of the workflow.

When to run this workflow – here it’s configured to run on every push to the repo.

A job that will do the building.

The type of environment in which to run the workflow – in this case, we’re running it on the latest version of Ubuntu.

Install the toolchain (cross compiler).

Check out the code.

Build the application.

Upload the target file to Github.

    name: Build target binary

    on: [push]

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Install toolchain
            run: |
              curl -O https://armkeil.blob.core.windows.net/developer/Files/downloads/gnu/12.2.rel1/binrel/arm-gnu-toolchain-12.2.rel1-x86_64-arm-none-eabi.tar.xz \
              && tar -xf arm-gnu-toolchain-12.2.rel1-x86_64-arm-none-eabi.tar.xz -C /opt \
              && echo "/opt/arm-gnu-toolchain-12.2.rel1-x86_64-arm-none-eabi/bin" >> $GITHUB_PATH
          - name: Check out code
            uses: actions/checkout@v3
          - name: Build application
            run: |
              cmake . -B build/Release --toolchain cmake/arm-none-eabi.cmake -DCMAKE_BUILD_TYPE=Release \
              && cmake --build build/Release
          - name: Save target binary
            uses: actions/upload-artifact@v3
            with:
              name: f303
              path: build/Release/f303

Now, if you push this file to the repository you will see this action start running. When it’s done the f303 build artifact (elf file) will be available to download from Github.

To see your workflows, click the Actions tab at the top of the repo page.

Clicking on one of the workflow runs will take you to the details page, where you can download the build artifact:

You can also click on the build job to inspect all of the output from every step of the workflow run:

Add a new self-hosted runner

Warning: Github highly recommends that you do not use self-hosted runners on public repositories as this can be a security risk – allowing forks of your repository to use your self-hosted runner to execute potentially dangerous code on your runner machine.

Now that the firmware is building in the Github Actions workflow, we just need to set up a workflow to run on the Raspberry Pi and flash the target. The first step is to add a new self-hosted runner to the repository – this is the one that we are going to host ourselves (on the Raspberry Pi).

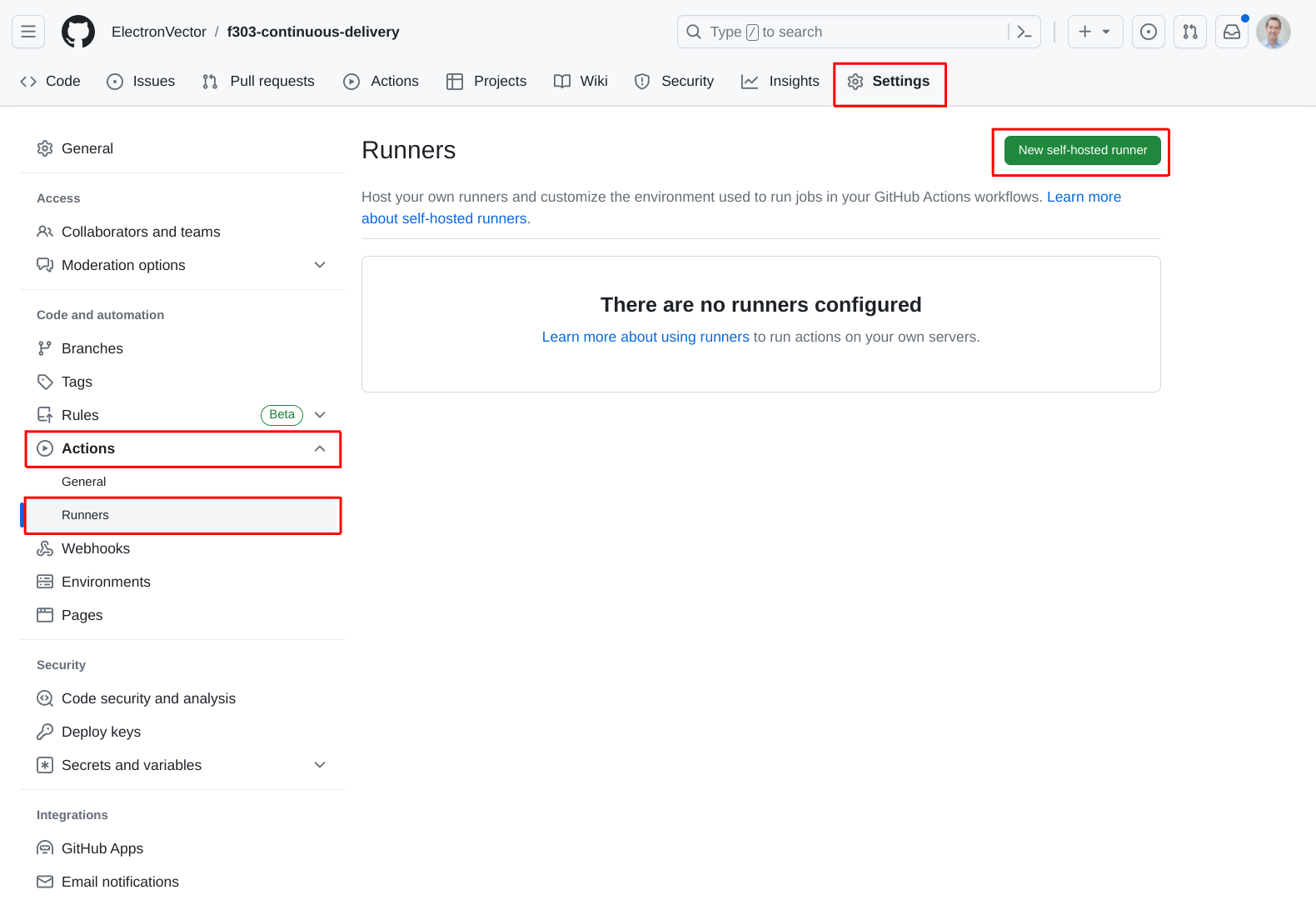

To add the self-hosted runner, click on the Settings tab at the top of the repo page, then select Actions and then Runners. Finally, click the New self-hosted runner button in the upper right:

From here, you need to select the OS and architecture of the target. I’m actually using a 64-bit installation on my Raspberry Pi 4, so I’ve selected the ARM64 architecture. This page also conveniently gives us all the instructions we need to install the runner on our Raspberry Pi.

Note: I’ve redacted the token that Github provided for me here. When you set this up you’ll get a token which you’ll use to connect your self-hosted runner to the repository.

Install the runner on the Raspberry Pi

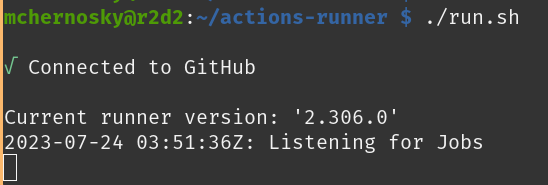

Now we just need to follow the steps Github just provided above to install the runner application on the Raspberry Pi. When you start it, it should look like this:

Modify our workflow to flash the target from the Raspberry Pi

Now that we have the Github runner application installed and running on the Raspberry Pi, we can update our existing build.yml workflow to include a new job to do the flashing of the target.

First we’ll want to rename the workflow file from build.yml to something like build-and-flash.yml (naming is important!) to indicate its expanded functionality.

Then we’ll want to add a new job to the workflow file that will execute the flashing commands on the Raspberry Pi. This job requires just a few configuration items:

Configure the job to run on the self-hosted runner.

Configure the job to run only after the build is complete (by default, jobs run in parallel).

Check out the code. We actually only need the code in this case to get the gdb-st-link-flash-and-exit command file that we’ll use with GDB to do the flashing.

Download the binary file saved during the build job.

Flash the target with GDB.

The entire build-and-flash.yml will look like this:

    name: Build and flash target binary

    on: [push]

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Install toolchain
            run: |
              curl -O https://armkeil.blob.core.windows.net/developer/Files/downloads/gnu/12.2.rel1/binrel/arm-gnu-toolchain-12.2.rel1-x86_64-arm-none-eabi.tar.xz \
              && tar -xf arm-gnu-toolchain-12.2.rel1-x86_64-arm-none-eabi.tar.xz -C /opt \
              && echo "/opt/arm-gnu-toolchain-12.2.rel1-x86_64-arm-none-eabi/bin" >> $GITHUB_PATH
          - name: Check out code
            uses: actions/checkout@v3
          - name: Build application
            run: |
              cmake . -B build/Release --toolchain cmake/arm-none-eabi.cmake -DCMAKE_BUILD_TYPE=Release \
              && cmake --build build/Release
          - name: Save target binary
            uses: actions/upload-artifact@v3
            with:
              name: f303
              path: build/Release/f303

      flash:
        runs-on: self-hosted
        needs: build
        steps:
          - name: Check out code
            uses: actions/checkout@v3
          - name: Download target binary
            uses: actions/download-artifact@v3
            with:
              name: f303
          - name: Flash target
            run: gdb-multiarch -x gdb-st-link-flash-and-exit f303

After pushing this code, the build-and-flash workflow will show two different jobs (both of which can be inspected independently).

When this workflow run is complete, the code has been built and flashed onto the target successfully.
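If more than one self-hosted runner is ever registered to the repository, `runs-on: self-hosted` alone could match any of them. One possible way to pin the flash job to the Raspberry Pi is to list several labels, all of which a runner must carry. The sketch below assumes the default labels Github assigns at registration (`self-hosted`, `linux`, `ARM64`) plus a custom label, `f303-discovery`, which is hypothetical and would have to be added to the runner yourself.

```yaml
jobs:
  # The build job is unchanged from the workflow above and is omitted here.

  flash:
    # A runner must have ALL of these labels to pick up the job.
    # `f303-discovery` is a hypothetical custom label, not a default one.
    runs-on: [self-hosted, linux, ARM64, f303-discovery]
    needs: build
    steps:
      - name: Check out code
        uses: actions/checkout@v3
      - name: Download target binary
        uses: actions/download-artifact@v3
        with:
          name: f303
      - name: Flash target
        run: gdb-multiarch -x gdb-st-link-flash-and-exit f303
```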

In summary

This is a very basic example of how to use cloud-based Github Actions to flash real hardware. This makes it possible to deliver firmware builds – as soon as new firmware is pushed – to whoever might need them. This could be useful for internal testers who want to start testing as soon as new firmware is ready.

Next steps

Note however that there are a lot of things that we didn’t do in this example. Depending on the situation, to make this more useful we might do things like:

Configure the build and flash workflow to run only on pushes to a certain branch, e.g. some sort of integration branch. This would make it easier for multiple developers to work together.

Configure different types of target boards, with different hardware. This could be useful if there have been a few different revisions of the target hardware. Loading automatically on all the different revisions would make backwards compatibility testing much easier.

Configure multiple target boards – each to be flashed from pushes to different branches. This could allow us to have a development as well as a release branch. This is more aligned with common web development practices.
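As a rough sketch of the branch-related ideas above, the trigger can be limited to specific branches, and each flash job can be restricted to one branch and one board. The branch names (`integration`, `release`) and the custom runner labels (`integration-board`, `release-board`) are hypothetical; they assume one self-hosted runner per board, each registered with its own label.

```yaml
on:
  push:
    # Only pushes to these (hypothetical) branches start the workflow.
    branches:
      - integration
      - release

jobs:
  # The build job is unchanged from the workflow above and is omitted here.

  flash-integration:
    # Run only for pushes to the integration branch.
    if: github.ref == 'refs/heads/integration'
    runs-on: [self-hosted, integration-board]   # hypothetical label
    needs: build
    steps:
      - uses: actions/checkout@v3
      - uses: actions/download-artifact@v3
        with:
          name: f303
      - run: gdb-multiarch -x gdb-st-link-flash-and-exit f303

  flash-release:
    # Run only for pushes to the release branch.
    if: github.ref == 'refs/heads/release'
    runs-on: [self-hosted, release-board]       # hypothetical label
    needs: build
    steps:
      - uses: actions/checkout@v3
      - uses: actions/download-artifact@v3
        with:
          name: f303
      - run: gdb-multiarch -x gdb-st-link-flash-and-exit f303
```

The two flash jobs are deliberately duplicated here to keep the example easy to read; a real setup might factor the shared steps out.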

Additionally, it makes sense to do at least a couple of things to the Raspberry Pi:

Configure it for additional security. We didn’t cover the Raspberry Pi setup, but it makes sense to be a bit more cautious with security.

Set up the runner application to run as a service. This will start the service whenever the Raspberry Pi is powered on so it’s always available.

There is also a change we could make to shorten the workflow execution time. Currently, the Github-hosted runner that does the build has to be reconstructed each time it is run. This includes downloading and installing the toolchain, which can take up to 30 seconds. Instead of rebuilding the runner environment for each run, using a pre-built Docker image would require less time.
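One way this might look is to run the build job inside a container, so the toolchain install step disappears. This is only a sketch: the image name below is hypothetical and assumes you have already built and published an image containing the arm-none-eabi toolchain and CMake. Pulling the image also takes time, so the actual saving would need to be measured.

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    # Run every step inside a pre-built image that already contains the
    # cross compiler and CMake. The image name is hypothetical.
    container:
      image: ghcr.io/your-org/arm-none-eabi-build:12.2
    steps:
      # No "Install toolchain" step: the tools are already on the PATH
      # inside the container (an assumption about how the image is built).
      - name: Check out code
        uses: actions/checkout@v3
      - name: Build application
        run: |
          cmake . -B build/Release --toolchain cmake/arm-none-eabi.cmake -DCMAKE_BUILD_TYPE=Release \
          && cmake --build build/Release
      - name: Save target binary
        uses: actions/upload-artifact@v3
        with:
          name: f303
          path: build/Release/f303
```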

Sample source code for this article can be found here: https://github.com/ElectronVector/f303-continuous-delivery